Abstract

Python is one of the most widely used programming languages for data analysis. In this warm-up, we introduce the most popular and powerful libraries for network science (e.g., graph-tool, NetworkX), for data analysis and visualization (Matplotlib, NumPy, SciPy, pandas, Seaborn), and for deep learning on graphs (PyTorch Geometric).

Python

What is Python?

Python is an interpreted, object-oriented, high-level programming language with dynamic semantics. […]

It is suitable for rapid development and for use as a “glue language” to connect various components (e.g., written in different languages).

Python is one of the most used programming languages[1]

[1]: StackOverflow’s 2021 survey

Why is Python so popular?

Its popularity can be traced to its characteristics:

- Python is fast to learn, very versatile and flexible

- It is very high-level, and complex operations can be performed with few lines of code

And to its large user-base:

- Vast assortment of libraries

- Solutions to your problems may be already available (e.g., on StackOverflow)

Some real-world applications

- Data science (loading, processing and plotting of data)

- Scientific and Numeric computing

- Modeling and Simulation

- Web development

- Artificial Intelligence and Machine Learning

- Image and Text processing

- Scripting

How to get Python?

Using the default environment that comes with your OS is not a great idea:

- It usually ships an older Python version

- You cannot freely upgrade the Python version or the libraries

- Some functions of your OS may depend on it

You can either:

- Download Python

- Use a distribution like Anaconda

Anaconda

Anaconda is a Python distribution that bundles the most used libraries for data analysis, processing and visualization

Anaconda installations are managed through the conda package manager

Anaconda “distribution” is free and open source

Python virtual environments

A virtual environment is a Python environment such that the Python interpreter, libraries and scripts installed into it are isolated from those installed in other virtual environments

Environments let you pin specific interpreter and library versions for each project

If you start a new project and need newer libraries, just create a new environment

You won’t have to worry about breaking the other projects

Environment creation

conda create --name <ENV_NAME> [<PACKAGES_LIST>] [--channel <CHANNEL>]

You can also specify additional channels to search for packages (in order)

Example:

conda create --name gt python=3.9 graph-tool pytorch torchvision torchaudio cudatoolkit=11.3 pyg seaborn numpy scipy matplotlib jupyter -c pyg -c pytorch -c nvidia -c anaconda -c conda-forge

Switch environment

conda activate <ENV_NAME>

Example

conda activate gt

Data analysis and visualization libraries

- NumPy

- SciPy

- Matplotlib

- Seaborn

- Pandas

These libraries are general-purpose and can also be used in network analysis

Network analysis and visualization libraries

- graph-tool

- PyTorch Geometric (PyG)

NumPy

NumPy is the fundamental package for scientific computing in Python.

NumPy offers new data structures:

- Multidimensional array objects (the ndarray)

- Various derived objects (like masked arrays)

And also a vast assortment of functions:

- Mathematical functions (arithmetic, trigonometric, hyperbolic, rounding, …)

- Sorting, searching, and counting

- Operations on Sets

- Input and output

- Fast Fourier Transform

- Complex number handling

- (Pseudo)random number generation

NumPy is fast:

- Its core is written in C/C++

- Arrays are stored in contiguous memory locations

It also offers tools for integrating C/C++ code

Many libraries are built on top of NumPy’s arrays and functions.

The ndarray

Mono-dimensional:

array([0.23949294, 0.49364534, 0.10055484])

Multi-dimensional:

array([[0.45292492, 0.32975629, 0.53797728]])

array([[[9, 1],
        [9, 9]],

       [[5, 7],
        [3, 3]]])
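The code that produced these arrays is not preserved here. The following is a minimal sketch of how arrays with the same shapes could be created; the seed and the value ranges are arbitrary assumptions, so the numbers will differ from those shown.

```python
import numpy as np

# Seeded generator so the sketch is reproducible (the seed is arbitrary).
rng = np.random.default_rng(seed=0)

vector = rng.random(3)                       # 1-D array, shape (3,)
row_matrix = rng.random((1, 3))              # 2-D array, shape (1, 3)
cube = rng.integers(0, 10, size=(2, 2, 2))   # 3-D integer array, shape (2, 2, 2)

for name, arr in [("vector", vector), ("row_matrix", row_matrix), ("cube", cube)]:
    print(name, arr.ndim, arr.shape, arr.dtype)

# Element-wise arithmetic and reductions run in compiled code, without a Python loop.
scaled = vector * 2 + 1
per_axis = cube.sum(axis=0)   # collapses the first axis, leaving shape (2, 2)
```

Note that the second array is two-dimensional even though it holds a single row: the number of nested brackets, not the number of values, determines `ndim`.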

SciPy

SciPy is a collection of mathematical algorithms and convenience functions built on the NumPy library

Much of SciPy's numerical core is written in C, C++ and Fortran, and it provides:

- Algorithms for optimization, integration, interpolation, eigenvalue problems, algebraic equations, differential equations, statistics, etc.

- Specialized data structures, such as sparse matrices and k-dimensional trees

- Tools for interactive Python sessions

SciPy’s main subpackages include:

Data clustering algorithms

Physical and mathematical constants

Fast Fourier Transform routines

Integration and ordinary differential equation solvers

Linear algebra

…

…

N-dimensional image processing

Optimization and root-finding routines

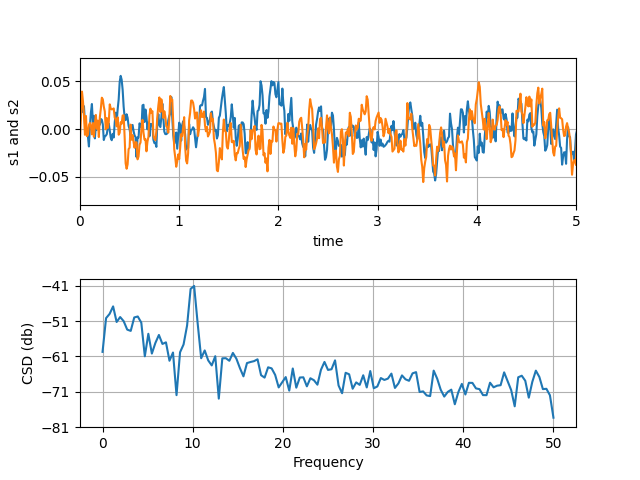

Signal processing

Sparse matrices and associated routines

Spatial data structures and algorithms

Statistical distributions and functions

| |

Sparse matrices

There are many sparse matrix implementations, each optimized for different operations.

For instance:

- Coordinate (COO)

- List of Lists (LIL)

- Compressed Sparse Row (CSR)

- Compressed Sparse Column (CSC)

For more, check this tutorial: Sparse matrices tutorial
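As a rough illustration of why several formats exist, the sketch below builds a small matrix in COO form, converts it to CSR and CSC, and edits a LIL matrix entry by entry. The matrix values are made up for the example.

```python
import numpy as np
from scipy import sparse

# Three nonzero entries of a 4x4 matrix, given as (row, column, value) triplets.
rows = np.array([0, 1, 3])
cols = np.array([1, 2, 0])
vals = np.array([1.0, 2.0, 3.0])

coo = sparse.coo_matrix((vals, (rows, cols)), shape=(4, 4))  # convenient to build
csr = coo.tocsr()   # efficient row slicing and matrix-vector products
csc = coo.tocsc()   # efficient column slicing

lil = sparse.lil_matrix((4, 4))  # cheap to modify one entry at a time
lil[2, 3] = 5.0

dense = csr.toarray()
row_sums = np.asarray(csr.sum(axis=1)).ravel()
print(csr.nnz, row_sums)
```

A common pattern is to assemble a matrix in COO or LIL form and convert it to CSR or CSC once, before doing arithmetic on it.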

Pandas

pandas allows easy data organization, filtering, analysis and plotting

pandas provides data structures for “relational” or “labeled” data, for instance:

- Tabular data with heterogeneously-typed columns, as in an Excel spreadsheet

- Ordered and unordered time series data

- Arbitrary matrix data (even heterogeneously typed) with row and column labels

The two primary data structures provided are the:

- Series (1-dimensional)

- DataFrame (2-dimensional)


These structures heavily rely on NumPy and its arrays

pandas integrates well with other libraries built on top of NumPy

Supported file formats

pandas can read data from, and write data to, SQL databases, Excel files, CSV files, and more

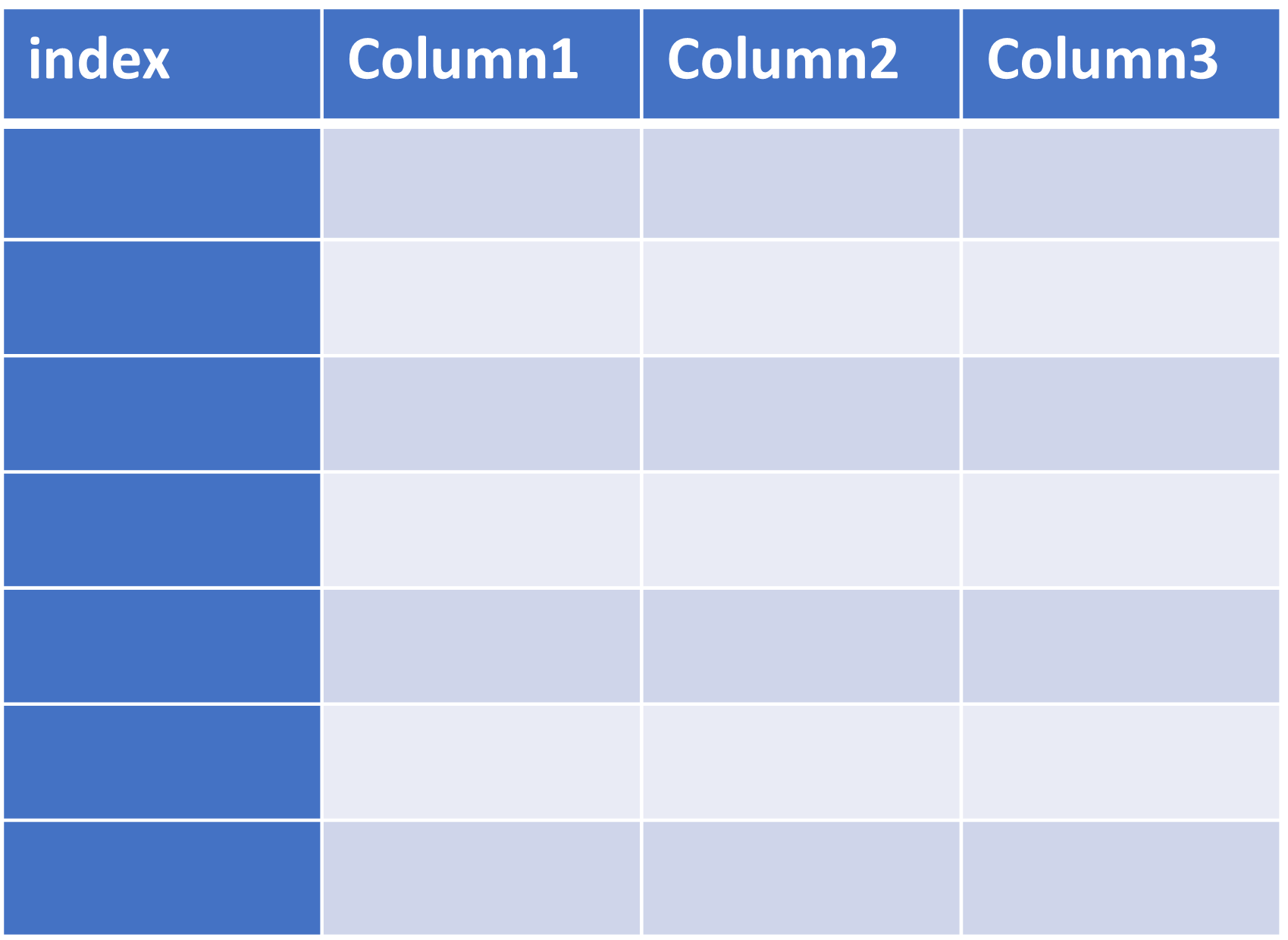

DataFrame example

Penguins example dataset from the Seaborn package

| | species | island | bill_length_mm | bill_depth_mm | flipper_length_mm | body_mass_g | sex |

|---|---|---|---|---|---|---|---|

| 0 | Adelie | Torgersen | 39.1 | 18.7 | 181.0 | 3750.0 | Male |

| 1 | Adelie | Torgersen | 39.5 | 17.4 | 186.0 | 3800.0 | Female |

| 2 | Adelie | Torgersen | 40.3 | 18.0 | 195.0 | 3250.0 | Female |

| 3 | Adelie | Torgersen | NaN | NaN | NaN | NaN | NaN |

| 4 | Adelie | Torgersen | 36.7 | 19.3 | 193.0 | 3450.0 | Female |

| ... | ... | ... | ... | ... | ... | ... | ... |

| 339 | Gentoo | Biscoe | NaN | NaN | NaN | NaN | NaN |

| 340 | Gentoo | Biscoe | 46.8 | 14.3 | 215.0 | 4850.0 | Female |

| 341 | Gentoo | Biscoe | 50.4 | 15.7 | 222.0 | 5750.0 | Male |

| 342 | Gentoo | Biscoe | 45.2 | 14.8 | 212.0 | 5200.0 | Female |

| 343 | Gentoo | Biscoe | 49.9 | 16.1 | 213.0 | 5400.0 | Male |

344 rows × 7 columns
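The original loading cell is not preserved. In a notebook, the full dataset is typically obtained with `seaborn.load_dataset("penguins")`, which downloads it on first use. To stay self-contained, the sketch below instead types in the first five rows of the table by hand and runs a few basic queries on them, so the statistics it prints refer to this small sample only.

```python
import numpy as np
import pandas as pd

# The first five rows of the table above, typed in by hand.
penguins = pd.DataFrame(
    {
        "species": ["Adelie"] * 5,
        "island": ["Torgersen"] * 5,
        "bill_length_mm": [39.1, 39.5, 40.3, np.nan, 36.7],
        "bill_depth_mm": [18.7, 17.4, 18.0, np.nan, 19.3],
        "flipper_length_mm": [181.0, 186.0, 195.0, np.nan, 193.0],
        "body_mass_g": [3750.0, 3800.0, 3250.0, np.nan, 3450.0],
        "sex": ["Male", "Female", "Female", np.nan, "Female"],
    }
)

species = penguins["species"]                   # a single column is a Series
unique_species = species.unique()               # NumPy array of distinct values
mean_bill = penguins["bill_length_mm"].mean()   # NaN entries are skipped by default
std_bill = penguins["bill_length_mm"].std()     # sample standard deviation (ddof=1)

print(penguins.shape, list(unique_species), round(mean_bill, 2))
```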

Series of the species


0 Adelie

1 Adelie

2 Adelie

3 Adelie

4 Adelie

...

339 Gentoo

340 Gentoo

341 Gentoo

342 Gentoo

343 Gentoo

Name: species, Length: 344, dtype: object

Unique species

array(['Adelie', 'Chinstrap', 'Gentoo'], dtype=object)

Average bill length

43.9219298245614

Standard deviation of the bill length

5.4595837139265315

Data filtering for male penguins


0 True

1 False

2 False

3 False

4 False

...

339 False

340 False

341 True

342 False

343 True

Name: sex, Length: 344, dtype: bool

| | species | island | bill_length_mm | bill_depth_mm | flipper_length_mm | body_mass_g | sex |

|---|---|---|---|---|---|---|---|

| 0 | Adelie | Torgersen | 39.1 | 18.7 | 181.0 | 3750.0 | Male |

| 5 | Adelie | Torgersen | 39.3 | 20.6 | 190.0 | 3650.0 | Male |

| 7 | Adelie | Torgersen | 39.2 | 19.6 | 195.0 | 4675.0 | Male |

| 13 | Adelie | Torgersen | 38.6 | 21.2 | 191.0 | 3800.0 | Male |

| 14 | Adelie | Torgersen | 34.6 | 21.1 | 198.0 | 4400.0 | Male |

| ... | ... | ... | ... | ... | ... | ... | ... |

| 333 | Gentoo | Biscoe | 51.5 | 16.3 | 230.0 | 5500.0 | Male |

| 335 | Gentoo | Biscoe | 55.1 | 16.0 | 230.0 | 5850.0 | Male |

| 337 | Gentoo | Biscoe | 48.8 | 16.2 | 222.0 | 6000.0 | Male |

| 341 | Gentoo | Biscoe | 50.4 | 15.7 | 222.0 | 5750.0 | Male |

| 343 | Gentoo | Biscoe | 49.9 | 16.1 | 213.0 | 5400.0 | Male |

168 rows × 7 columns

Average bill length for male penguins

45.85476190476191

Average bill length and weight for female penguins

bill_length_mm 42.096970

body_mass_g 3862.272727

dtype: float64
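The filtering steps above follow a common pandas pattern: build a boolean mask, use it to select rows, then aggregate. The sketch below applies that pattern to six rows copied from the tables above (a subset, so the averages differ from the full-dataset values).

```python
import pandas as pd

# Six rows taken from the tables above; the index keeps the original row labels.
sample = pd.DataFrame(
    {
        "species": ["Adelie", "Adelie", "Adelie", "Gentoo", "Gentoo", "Gentoo"],
        "bill_length_mm": [39.1, 39.5, 40.3, 46.8, 50.4, 49.9],
        "body_mass_g": [3750.0, 3800.0, 3250.0, 4850.0, 5750.0, 5400.0],
        "sex": ["Male", "Female", "Female", "Female", "Male", "Male"],
    },
    index=[0, 1, 2, 340, 341, 343],
)

is_male = sample["sex"] == "Male"   # boolean Series, one entry per row
males = sample[is_male]             # keeps only the rows where the mask is True
male_mean_bill = males["bill_length_mm"].mean()

females = sample[sample["sex"] == "Female"]
# Averaging several columns at once returns a Series indexed by column name.
female_means = females[["bill_length_mm", "body_mass_g"]].mean()

print(male_mean_bill)
print(female_means)
```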

Matplotlib

Matplotlib is a comprehensive library for creating static, animated, and interactive visualizations in Python
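A minimal sketch of a typical Matplotlib workflow, using the object-oriented interface (a figure plus one axes). The data and labels are made up, and the non-interactive `Agg` backend is selected only so that the example also runs without a display.

```python
import io

import matplotlib
matplotlib.use("Agg")  # non-interactive backend; drop this line inside a notebook
import matplotlib.pyplot as plt
import numpy as np

x = np.linspace(0, 2 * np.pi, 200)

fig, ax = plt.subplots(figsize=(6, 3))
ax.plot(x, np.sin(x), label="sin(x)")
ax.plot(x, np.cos(x), linestyle="--", label="cos(x)")
ax.set_xlabel("x")
ax.set_ylabel("value")
ax.set_title("A basic line plot")
ax.legend()
fig.tight_layout()

# Render to an in-memory PNG; pass a file name instead to write to disk.
buffer = io.BytesIO()
fig.savefig(buffer, format="png", dpi=150)
```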

Some plot examples

From the Matplotlib gallery

Line plots

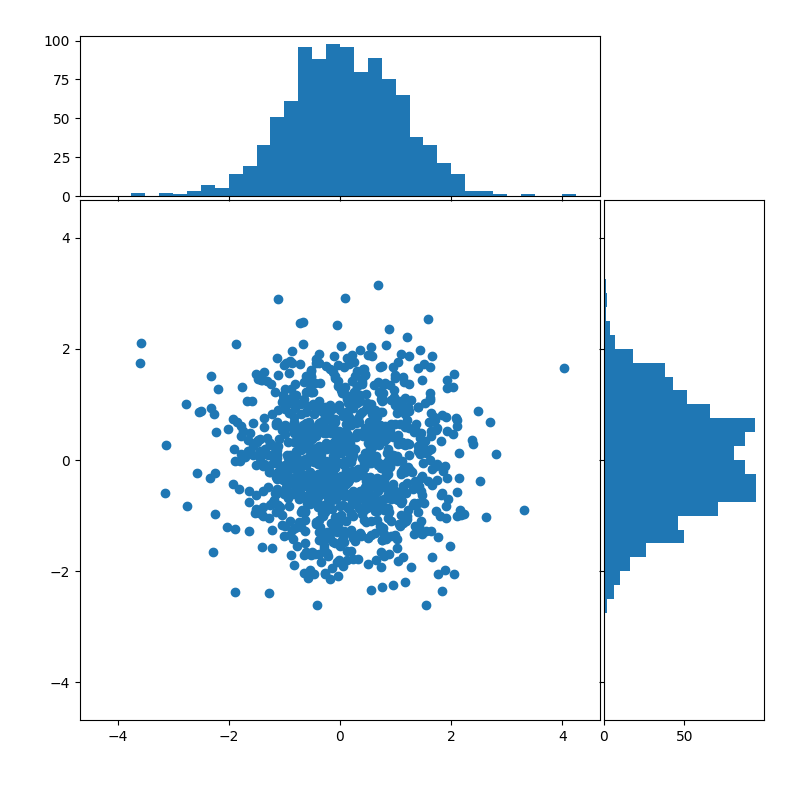

Scatterplots and histograms

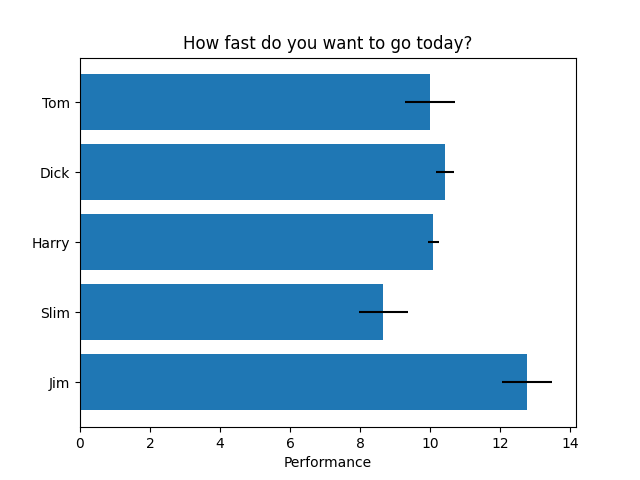

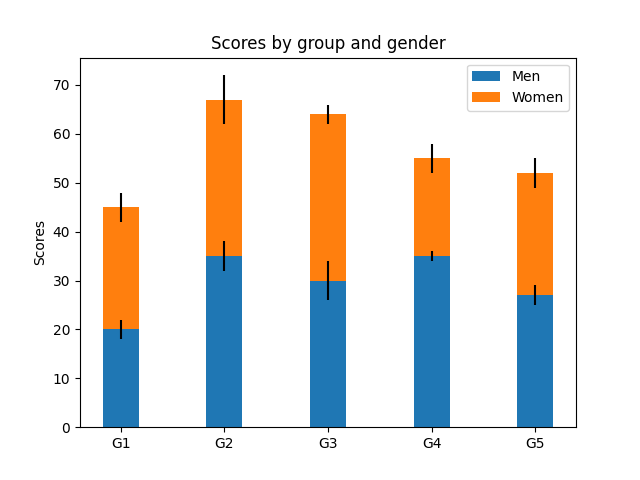

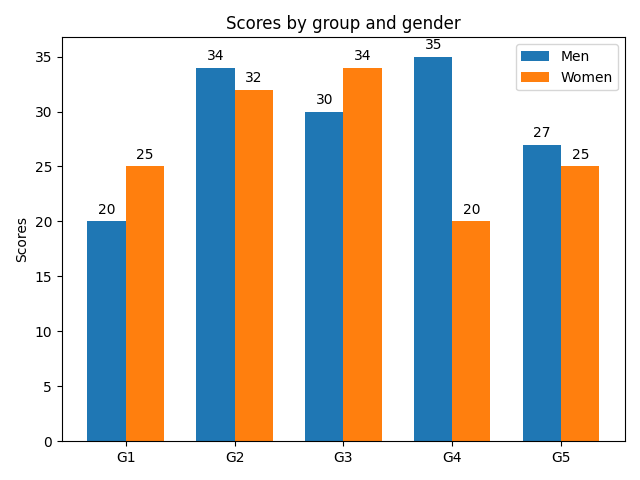

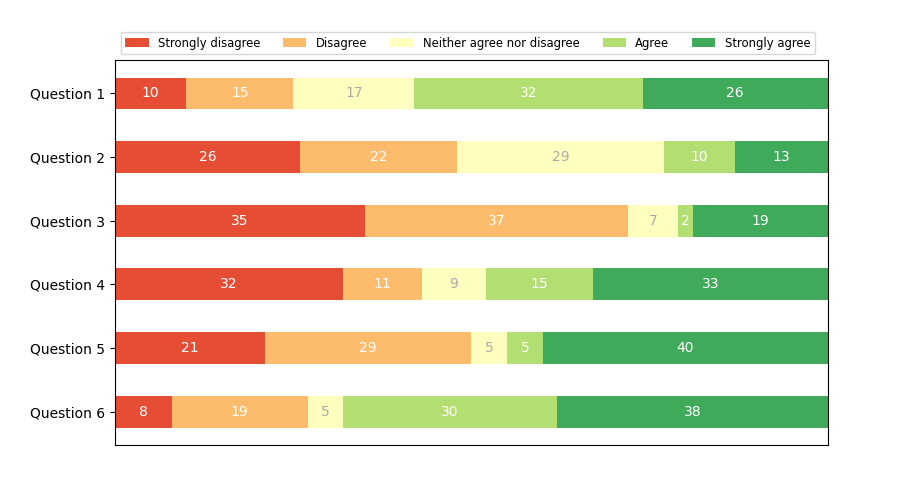

Barplots

Simple barplot

Stacked barplot

Grouped barplot

Horizontal bar chart

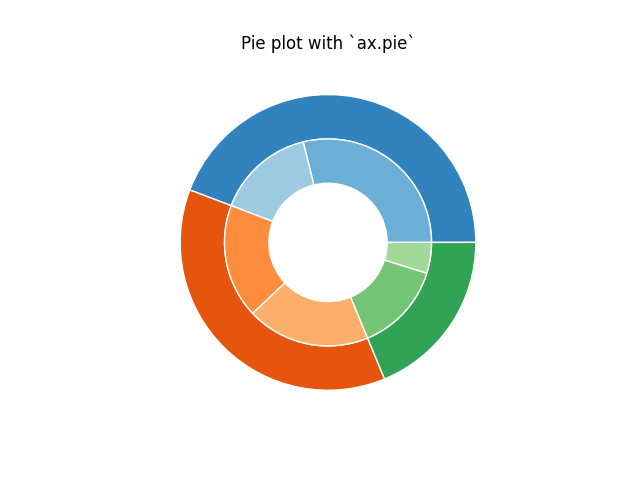

(Nested) pie charts

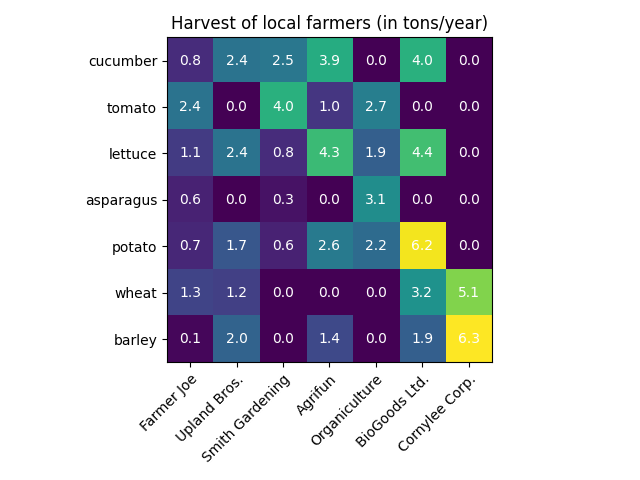

Heatmaps

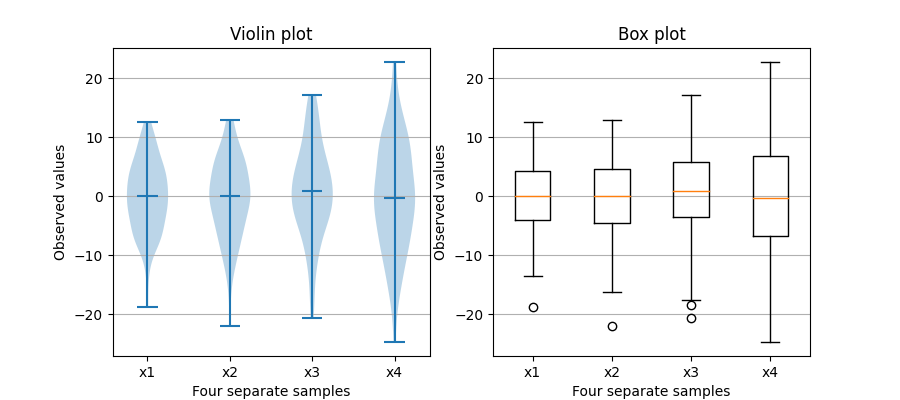

Violin and box plots

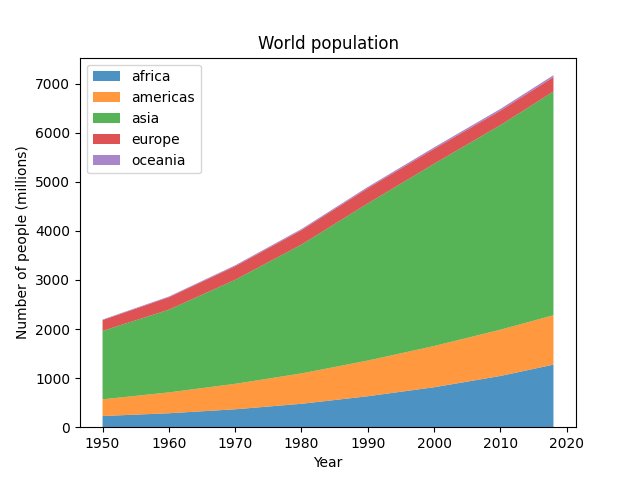

Stackplots

… and many more


Seaborn

Seaborn is a library for making statistical graphics in Python

Thanks to its high-level interface, it makes plotting very complex figures easy

Seaborn builds on top of matplotlib and integrates closely with pandas data structures


It provides helpers to improve how all matplotlib plots look:

- Theme and style

- Colors (even colorblind palettes)

- Scaling, to quickly switch between presentation contexts (e.g., paper, notebook, talk and poster)
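These three helpers can be set together with `seaborn.set_theme`. The sketch below is one possible combination (the chosen style, palette and context are arbitrary) applied to a plain Matplotlib plot.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; drop this line inside a notebook
import matplotlib.pyplot as plt
import numpy as np
import seaborn as sns

# One call sets the style, the colour palette and the scaling context.
sns.set_theme(style="whitegrid", palette="colorblind", context="talk")

x = np.linspace(0, 10, 100)
fig, ax = plt.subplots()
ax.plot(x, np.sin(x))   # a plain matplotlib call picks up the seaborn theme
ax.set_xlabel("x")
ax.set_ylabel("sin(x)")

# Contexts mainly rescale fonts and line widths.
talk_font = sns.plotting_context("talk")["font.size"]
paper_font = sns.plotting_context("paper")["font.size"]
print(paper_font, talk_font)
```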


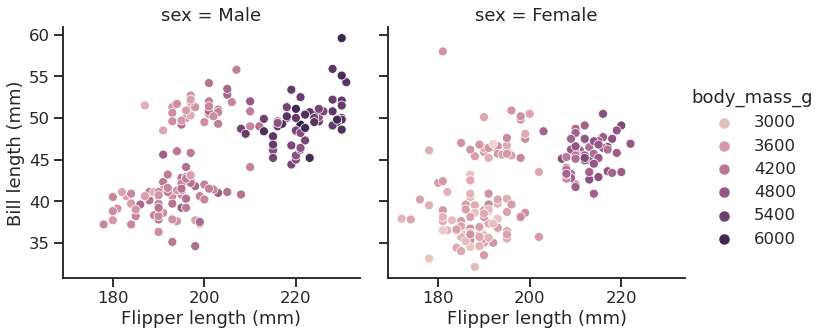

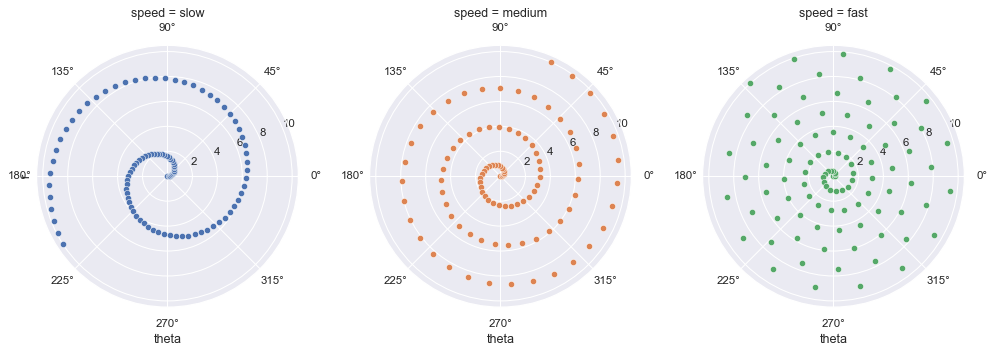

Seaborn’s FacetGrid offers a convenient way to visualize multiple plots in grids

They can be drawn with up to three dimensions: rows, columns and hue

Tutorial: Building structured multi-plot grids

| | species | island | bill_length_mm | bill_depth_mm | flipper_length_mm | body_mass_g | sex |

|---|---|---|---|---|---|---|---|

| 0 | Adelie | Torgersen | 39.1 | 18.7 | 181.0 | 3750.0 | Male |

| 1 | Adelie | Torgersen | 39.5 | 17.4 | 186.0 | 3800.0 | Female |

| 2 | Adelie | Torgersen | 40.3 | 18.0 | 195.0 | 3250.0 | Female |

| 3 | Adelie | Torgersen | NaN | NaN | NaN | NaN | NaN |

| 4 | Adelie | Torgersen | 36.7 | 19.3 | 193.0 | 3450.0 | Female |


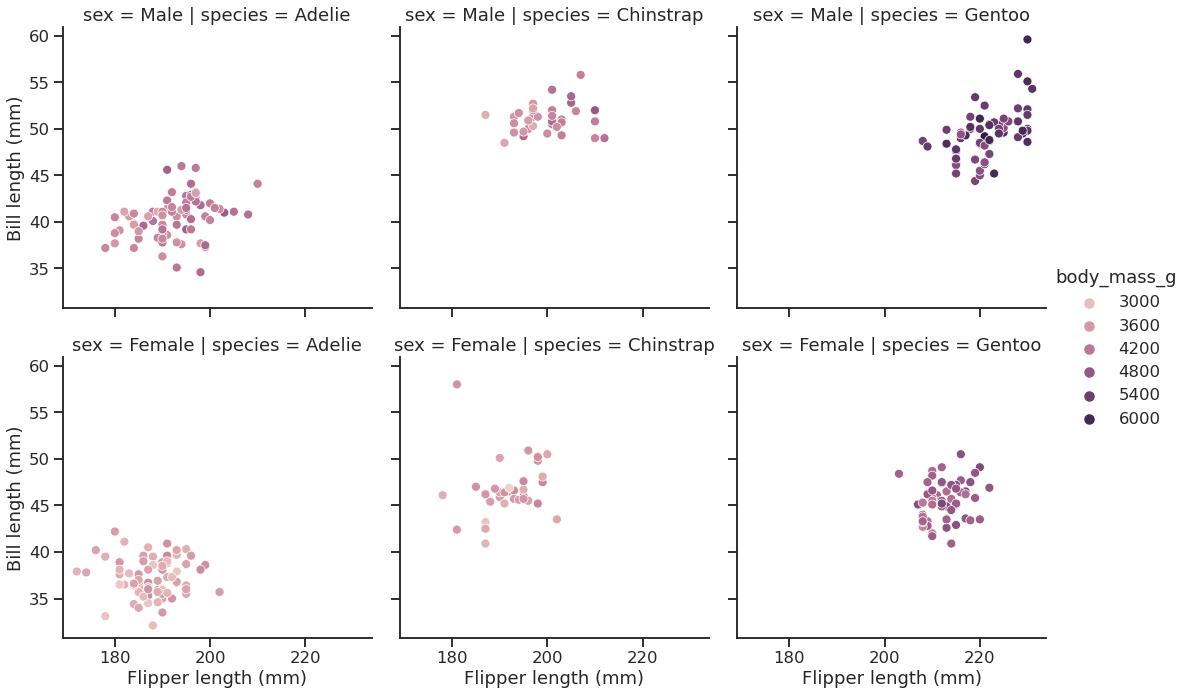

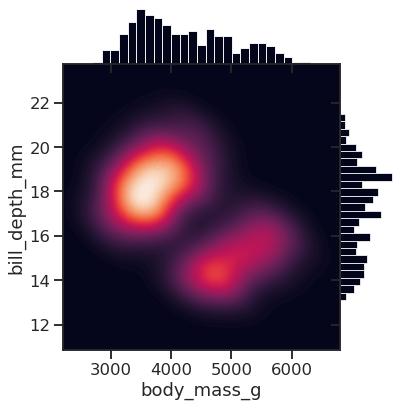

Some more plot examples

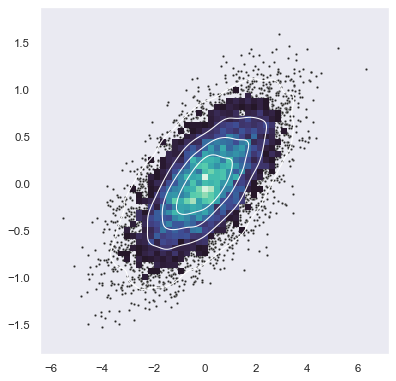

Smooth kernel density with marginal histograms (source)

Joint and marginal histograms

Custom projections

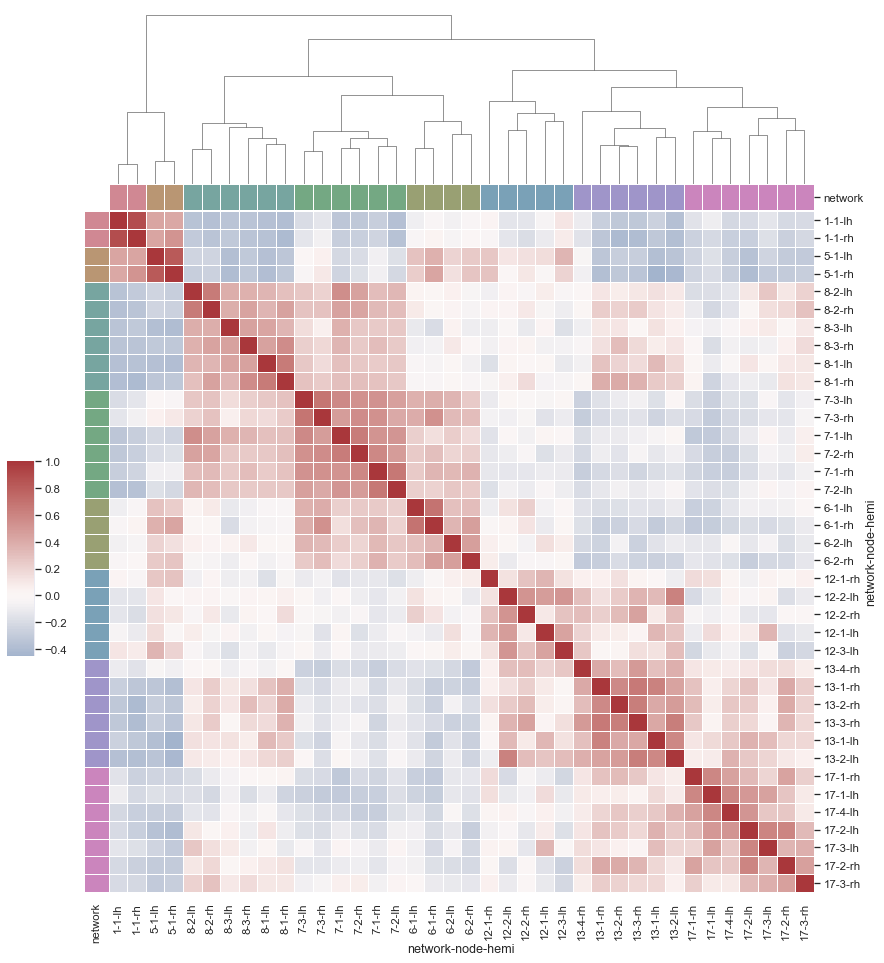

Discovering structure in heatmap data

Bivariate plot with multiple elements

Network Analysis with Python

Three main libraries:

- graph-tool

- networkx

- python-igraph

graph-tool

graph-tool is a graph analysis library for Python

It provides the Graph data structure, and various algorithms

It is mostly written in C++, and based on the Boost Graph Library

It supports multithreading and it is fairly easy to extend

Built-in algorithms:

- Topology analysis tools

- Centrality-related algorithms

- Clustering coefficient (transitivity) algorithms

- Correlation algorithms, such as assortativity

- Dynamical processes (e.g., SIR, SIS, …)

- Graph drawing tools

- Random graph generation

- Statistical inference of generative network models

- Spectral properties computation


Performance comparison (source: graph-tool.skewed.de/)

| Algorithm | graph-tool (16 threads) | graph-tool (1 thread) | igraph | NetworkX |

|---|---|---|---|---|

| Single-source shortest path | 0.0023 s | 0.0022 s | 0.0092 s | 0.25 s |

| Global clustering | 0.011 s | 0.025 s | 0.027 s | 7.94 s |

| PageRank | 0.0052 s | 0.022 s | 0.072 s | 1.54 s |

| K-core | 0.0033 s | 0.0036 s | 0.0098 s | 0.72 s |

| Minimum spanning tree | 0.0073 s | 0.0072 s | 0.026 s | 0.64 s |

| Betweenness | 102 s (~1.7 mins) | 331 s (~5.5 mins) | 198 s (vertex) + 439 s (edge) (~ 10.6 mins) | 10297 s (vertex) 13913 s (edge) (~6.7 hours) |

How to load a network

Choose a graph analysis library. The right one mostly depends on your needs (e.g., functions, performance, etc.)

In this warm-up, we will use graph-tool.

To load the network, we need to use the right loader function, which depends on the file format

File formats

There are many ways to represent and store graphs.

The most popular ones are:

- edgelist

- GraphML

For more about file types, check the NetworkX documentation

Edgelist (.el, .edge, …)

As the name suggests, it is a list of node pairs (source, target) and edge properties (if any). Edgelists cannot store any information about the nodes, or about the graph (not even about the directedness)

Values may be separated by commas, spaces, tabs, etc. Comments may be supported by the reader function.

Example file:

# source, target, weight

0,1,1

0,2,2

0,3,2

0,4,1

0,5,1

0,6,1

1,18,1

1,3,1

1,4,2

2,0,1

2,25,1

#...
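Graph libraries usually ship their own edgelist readers, but the format is simple enough to parse with general tools. As a sketch, the snippet below reads the first rows of the example file with pandas, skipping the comment line. The column names are taken from the comment in the file.

```python
import io

import pandas as pd

# The first rows of the example file above, inlined so the sketch is self-contained.
edgelist_text = """# source, target, weight
0,1,1
0,2,2
0,3,2
0,4,1
"""

edges = pd.read_csv(
    io.StringIO(edgelist_text),
    comment="#",     # skip comment lines
    header=None,     # the file has no header row
    names=["source", "target", "weight"],
)

# The node set is not stored in the file; it must be inferred from the edges.
nodes = sorted(set(edges["source"]) | set(edges["target"]))
print(len(edges), "edges among", len(nodes), "nodes")
```

Because the node set is inferred, isolated nodes (nodes with no edges) cannot be represented in this format.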

GraphML (.graphml, .xml)

Flexible format based on XML.

It can store hierarchical graphs, information (i.e., attributes or properties) about the graph, the nodes and the edges.

Main drawback: heavy disk usage (space, and I/O time)

Example of the file:
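The original example file is not preserved here. As a stand-in, the sketch below embeds a hand-written, minimal GraphML document (hypothetical, with three nodes and two weighted edges) and reads it with Python's standard XML parser, to show where the directedness, the nodes and the edge attributes live in the file.

```python
import xml.etree.ElementTree as ET

# A hand-written, minimal GraphML document (hypothetical, not the original example).
graphml_text = """<?xml version="1.0" encoding="UTF-8"?>
<graphml xmlns="http://graphml.graphdrawing.org/xmlns">
  <key id="w" for="edge" attr.name="weight" attr.type="int"/>
  <graph id="G" edgedefault="directed">
    <node id="n0"/>
    <node id="n1"/>
    <node id="n2"/>
    <edge source="n0" target="n1"><data key="w">1</data></edge>
    <edge source="n0" target="n2"><data key="w">2</data></edge>
  </graph>
</graphml>
"""

ns = {"g": "http://graphml.graphdrawing.org/xmlns"}
root = ET.fromstring(graphml_text.encode("utf-8"))
graph = root.find("g:graph", ns)

directed = graph.get("edgedefault") == "directed"   # stored in the file itself
node_ids = [node.get("id") for node in graph.findall("g:node", ns)]
edges = [
    (edge.get("source"), edge.get("target"), int(edge.find("g:data", ns).text))
    for edge in graph.findall("g:edge", ns)
]
print(directed, node_ids, edges)
```

In practice a graph library's own GraphML loader would be used; the manual parsing here only serves to expose the file structure.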

How to load a network

After identifying the file format and the right loader function, we load the network


<Graph object, directed, with 70 vertices and 366 edges, 1 internal vertex property, 1 internal edge property, 7 internal graph properties, at 0x7f1ed41879d0>

Graph properties: citation, description, konect_meta, konect_readme, name, url (type string) and tags (type vector<string>)

Vertex properties: _pos (type vector<double>)

Edge properties: weight (type int16_t)

Some network analysis

Get the number of nodes

Number of nodes: 70

Get the number of edges

Number of edges: 366

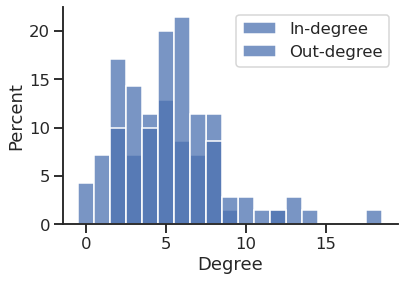

Get the in and out degrees

Average in degree: 5.228571428571429

Average out degree: 5.228571428571429

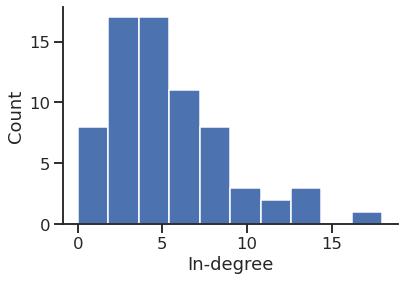

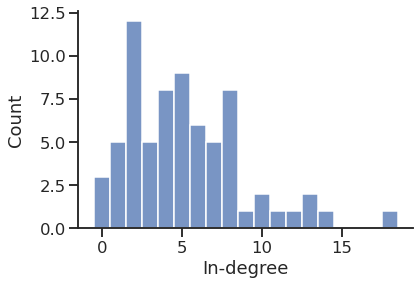

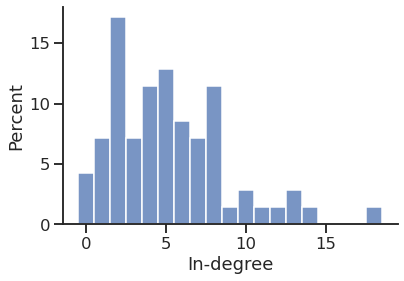

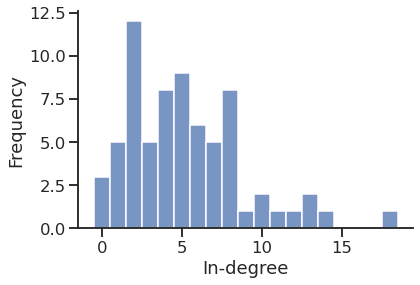

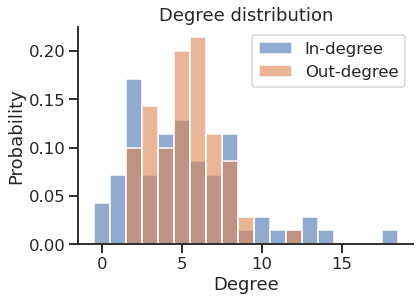

In-degree distribution


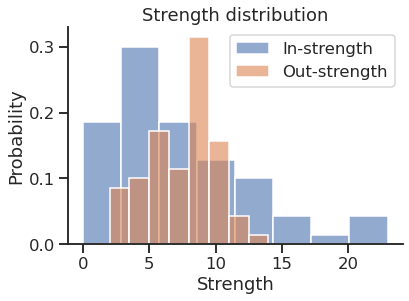

Get the in and out strength

Store the values as a DataFrame

| | Degree (In) | Degree (Out) | Strength (In) | Strength (Out) |

|---|---|---|---|---|

| 0 | 2 | 6 | 2 | 8 |

| 1 | 2 | 3 | 3 | 4 |

| 2 | 2 | 4 | 3 | 5 |

| 3 | 12 | 6 | 19 | 9 |

| 4 | 13 | 5 | 21 | 9 |
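The original cells computed these quantities with the graph library. As a library-independent sketch, the same definitions can be applied directly to an edgelist with pandas: the degree counts the edges entering or leaving a node, and the strength sums their weights. Only the eleven edges listed in the example edgelist are used here, so in-degrees and in-strengths are partial compared with the table above.

```python
import pandas as pd

# Edges of the example edgelist shown earlier: (source, target, weight).
edges = pd.DataFrame(
    [(0, 1, 1), (0, 2, 2), (0, 3, 2), (0, 4, 1), (0, 5, 1), (0, 6, 1),
     (1, 18, 1), (1, 3, 1), (1, 4, 2), (2, 0, 1), (2, 25, 1)],
    columns=["source", "target", "weight"],
)
nodes = sorted(set(edges["source"]) | set(edges["target"]))


def per_node(group_column, values):
    """Sum `values` over edges grouped by one endpoint; nodes without such edges get 0."""
    return values.groupby(edges[group_column]).sum().reindex(nodes, fill_value=0)


ones = pd.Series(1, index=edges.index)   # each edge counts once towards the degree
stats = pd.DataFrame(
    {
        ("Degree", "In"): per_node("target", ones),
        ("Degree", "Out"): per_node("source", ones),
        ("Strength", "In"): per_node("target", edges["weight"]),
        ("Strength", "Out"): per_node("source", edges["weight"]),
    }
)
print(stats.head())
```

Every edge contributes exactly one unit of in-degree and one unit of out-degree, which is why the two averages reported earlier coincide (366 edges / 70 nodes ≈ 5.23).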

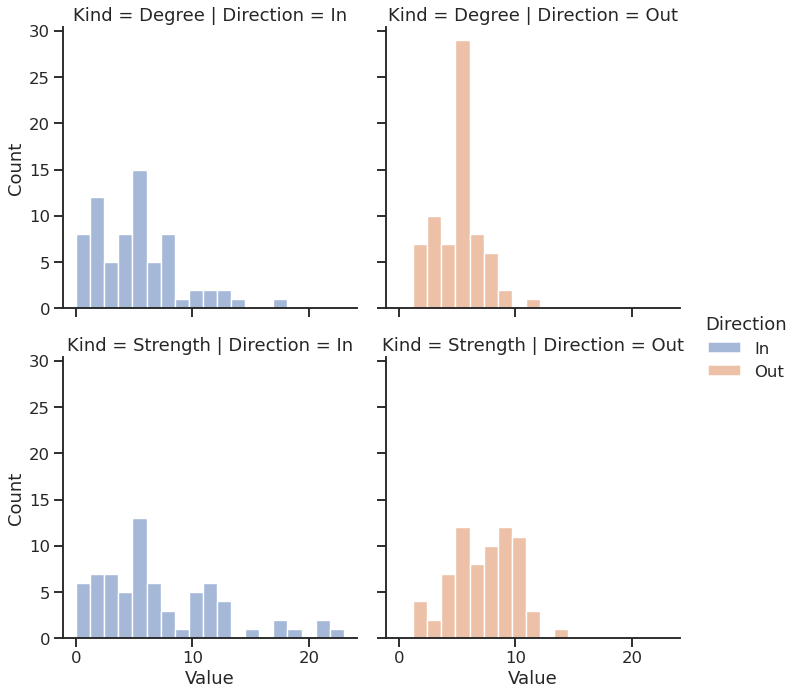

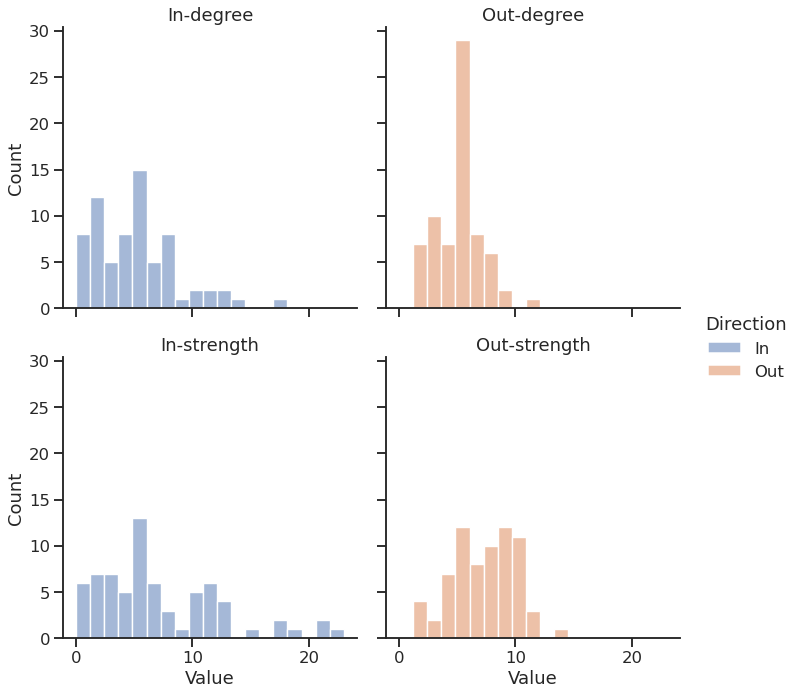

… and plot the DF using Seaborn

| | Kind | Direction | Value |

|---|---|---|---|

| 0 | Degree | In | 2.0 |

| 1 | Degree | In | 2.0 |

| 2 | Degree | In | 2.0 |

| 3 | Degree | In | 12.0 |

| 4 | Degree | In | 13.0 |

| ... | ... | ... | ... |

| 275 | Strength | Out | 7.0 |

| 276 | Strength | Out | 10.0 |

| 277 | Strength | Out | 10.0 |

| 278 | Strength | Out | 4.0 |

| 279 | Strength | Out | 5.0 |

280 rows × 3 columns


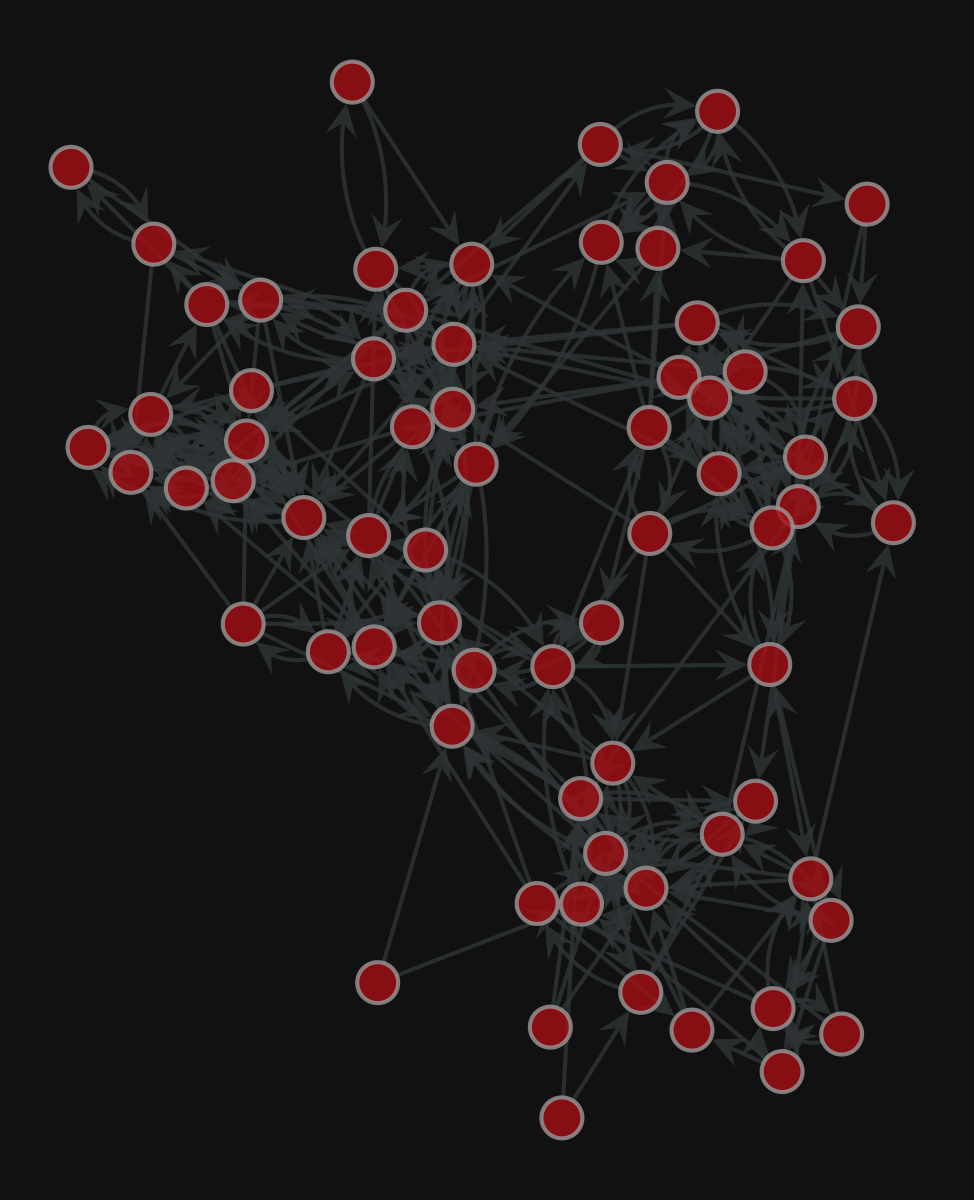

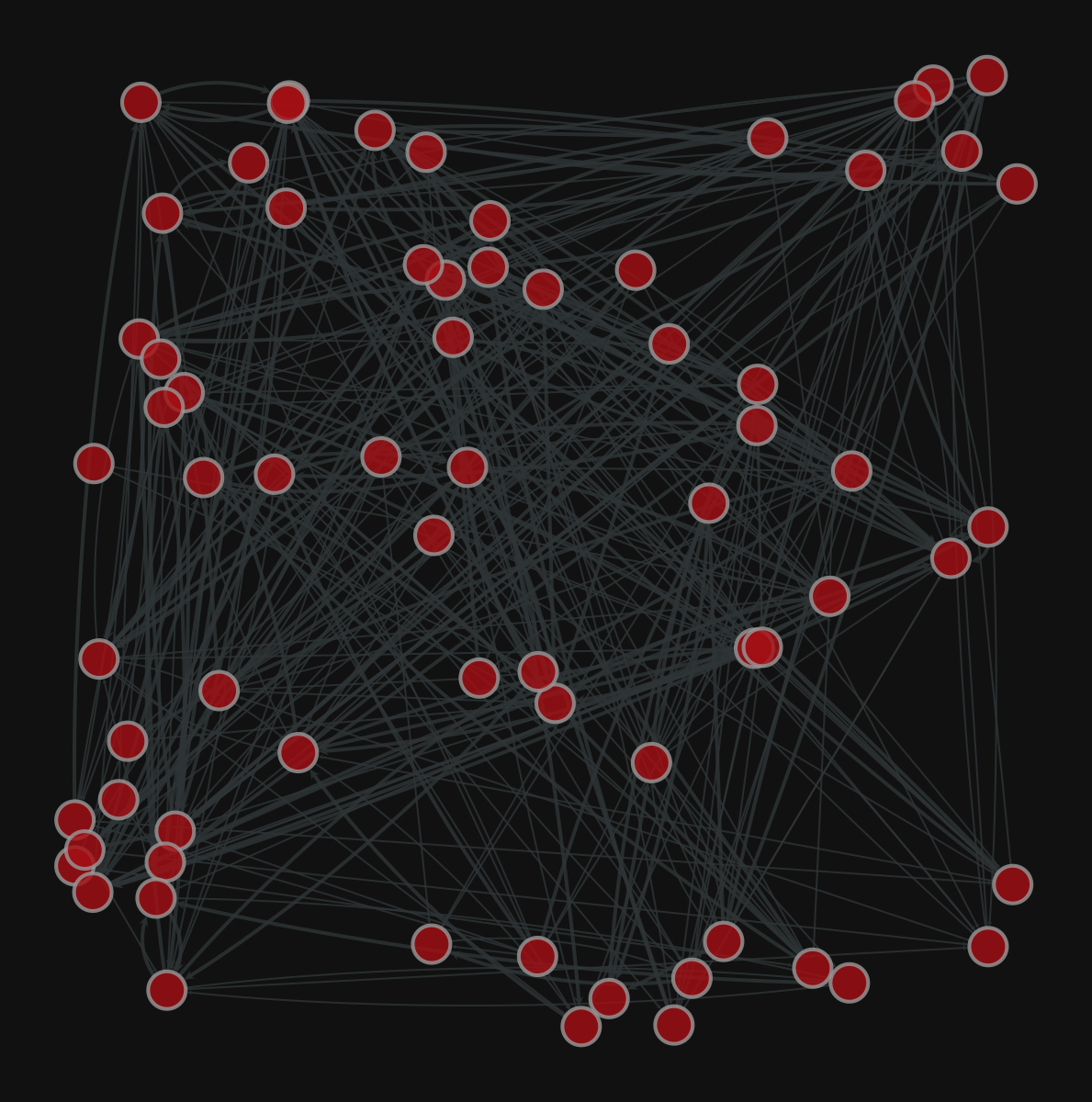

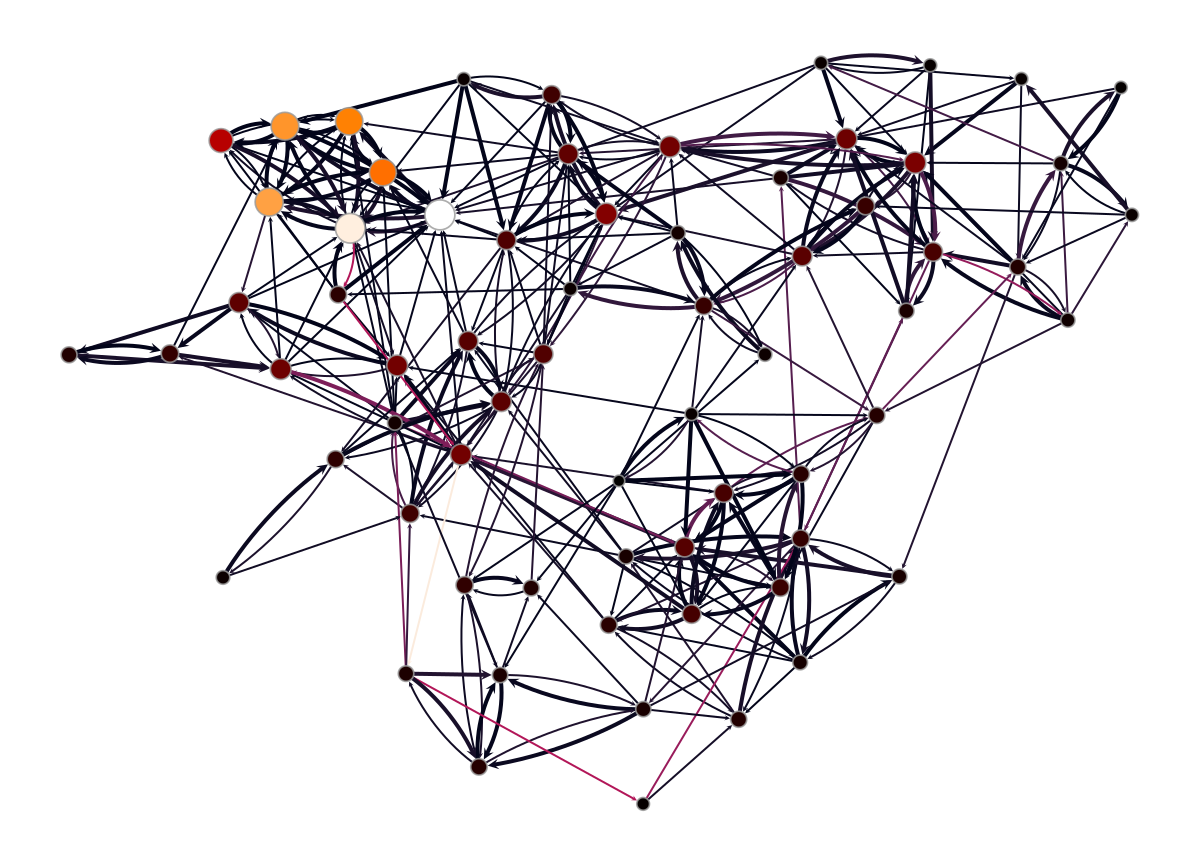

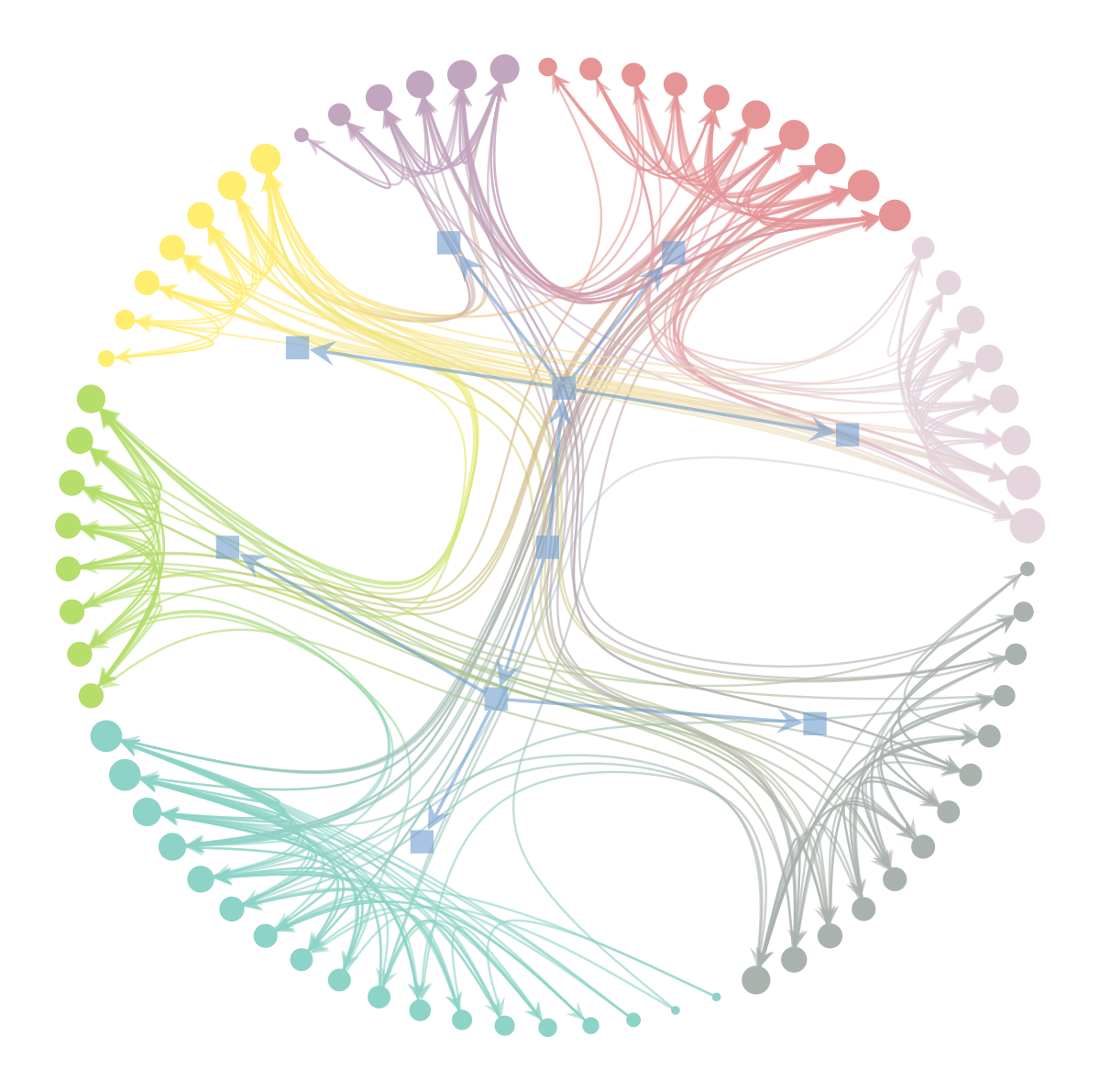

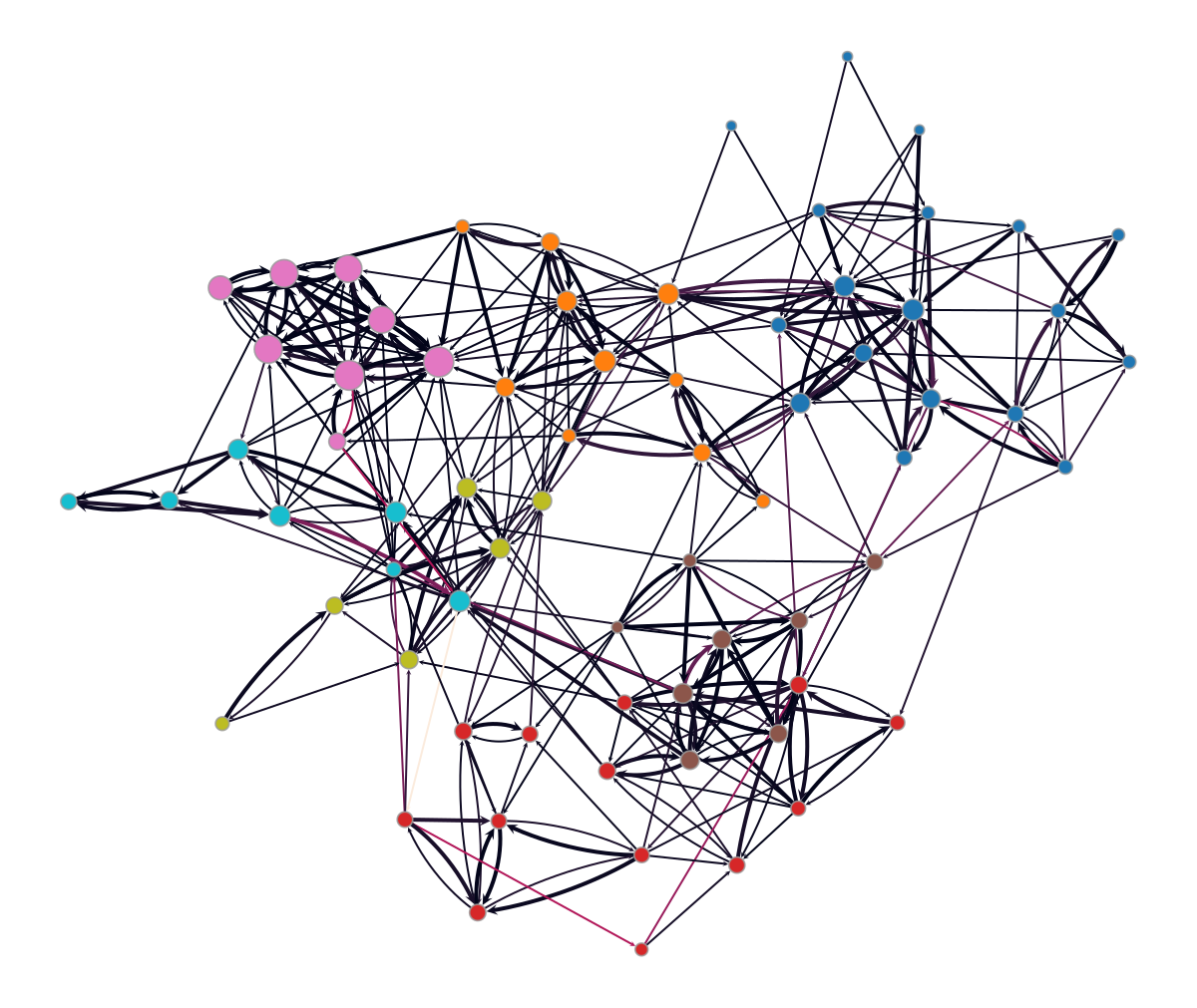

Graph visualization

1. Compute the node layout

2. Plot the network

Add the edge weight

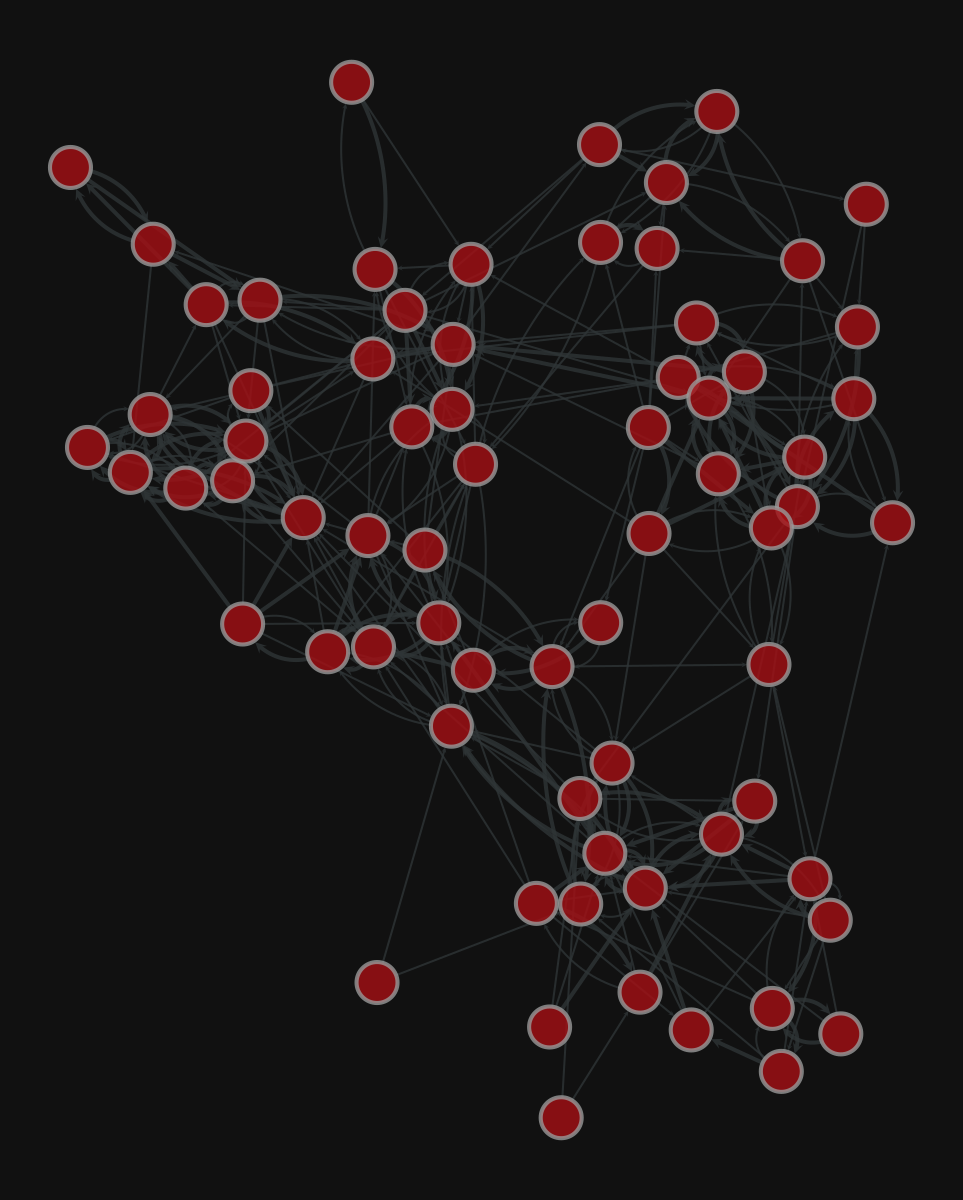

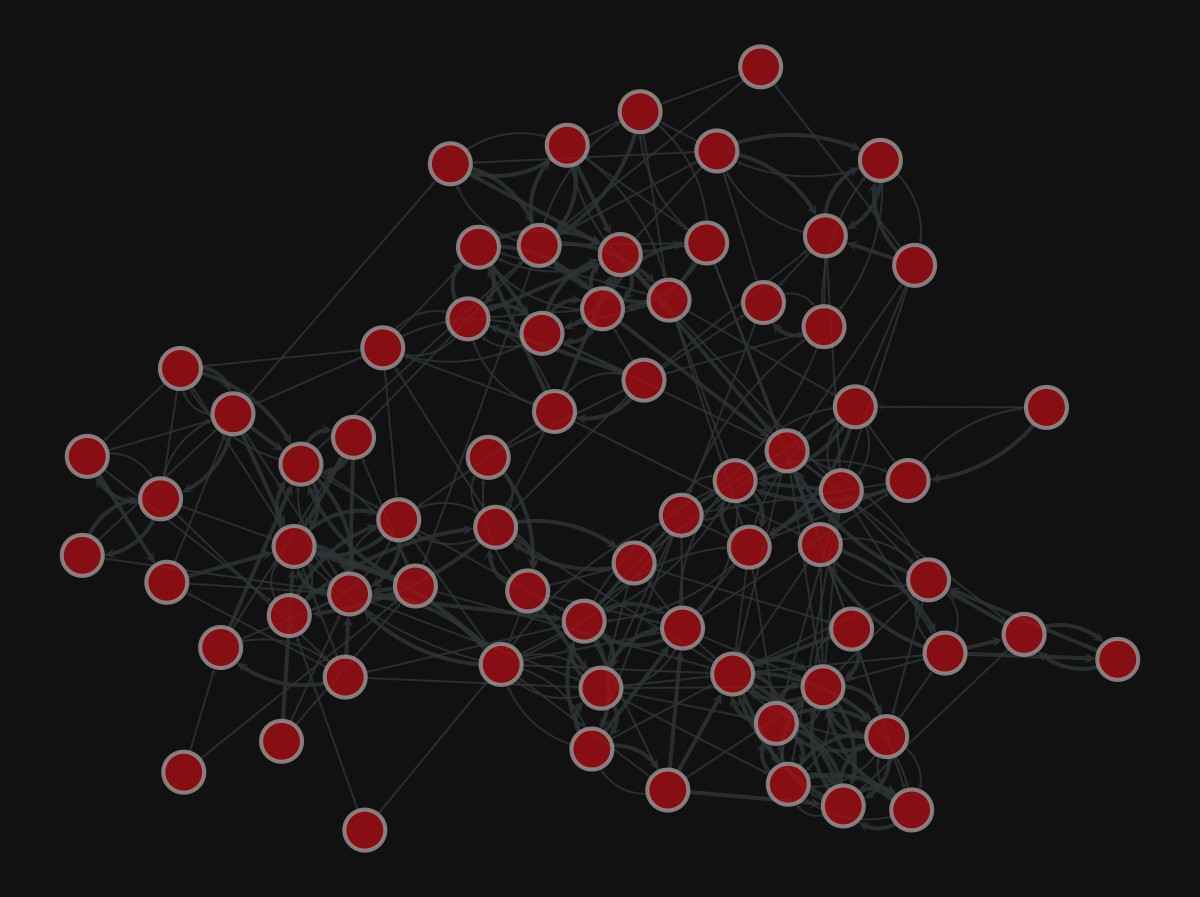

More layouts


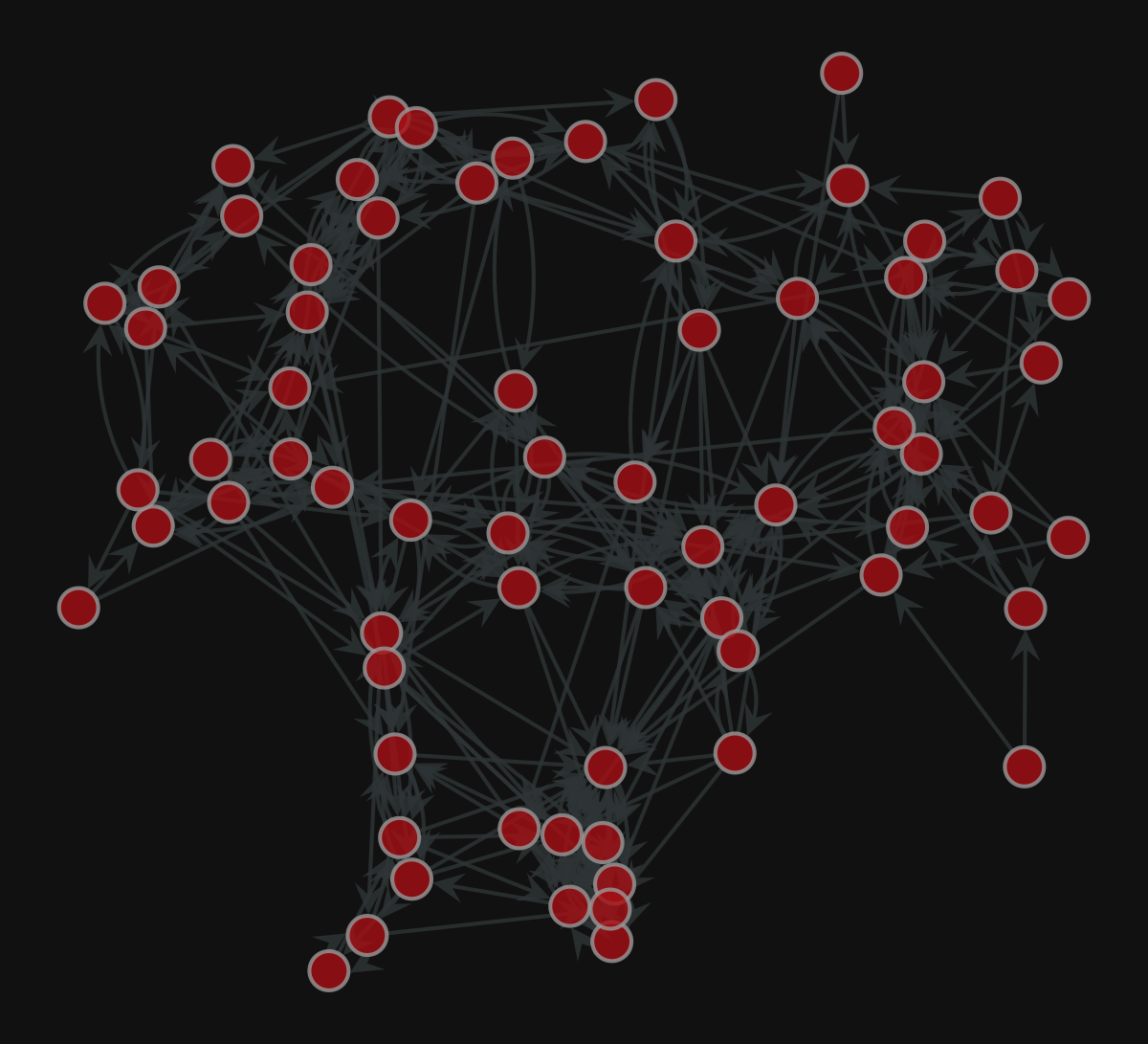

Centrality computation


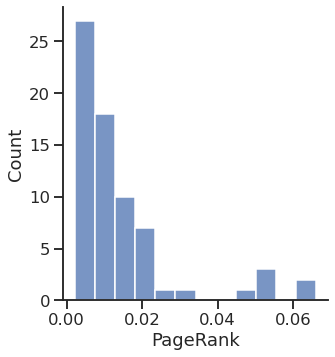

PageRank
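In the notebook, the scores were presumably obtained from the graph library's built-in routine. To make the idea concrete, the following is a minimal NumPy sketch of the standard damped power iteration; it is not the library's implementation. The damping factor 0.85, the tolerance and the toy graph are assumptions chosen for illustration.

```python
import numpy as np


def pagerank(edges, num_nodes, damping=0.85, tol=1e-10, max_iter=200):
    """Power iteration on a directed edge list of (source, target) pairs."""
    adjacency = np.zeros((num_nodes, num_nodes))
    for source, target in edges:
        adjacency[source, target] += 1.0

    out_degree = adjacency.sum(axis=1)
    dangling = out_degree == 0
    # Row-normalise; rows of dangling nodes stay all-zero and are handled below.
    transition = np.divide(
        adjacency, out_degree[:, None],
        out=np.zeros_like(adjacency), where=out_degree[:, None] > 0,
    )

    rank = np.full(num_nodes, 1.0 / num_nodes)
    for _ in range(max_iter):
        # Rank sitting on dangling nodes is spread uniformly over all nodes.
        dangling_mass = rank[dangling].sum() / num_nodes
        new_rank = (1 - damping) / num_nodes + damping * (rank @ transition + dangling_mass)
        if np.abs(new_rank - rank).sum() < tol:
            return new_rank
        rank = new_rank
    return rank


# A toy directed graph (hypothetical): 0 -> 1, 0 -> 2, 1 -> 2, 2 -> 0, 3 -> 2.
scores = pagerank([(0, 1), (0, 2), (1, 2), (2, 0), (3, 2)], num_nodes=4)
print(scores)
```

In the toy graph, node 3 has no incoming edges, so it only receives the uniform teleportation share `(1 - damping) / num_nodes`, while node 2, which collects links from three nodes, ranks highest.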


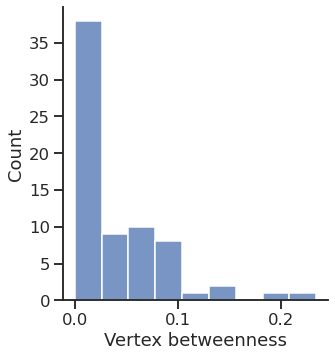

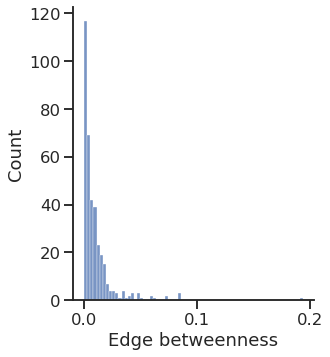

Betweenness


Inferring modular structure


Add some columns to our DataFrame

| | Degree (In) | Degree (Out) | Strength (In) | Strength (Out) | PageRank | Betweenness | Block |

|---|---|---|---|---|---|---|---|

| 0 | 2 | 6 | 2 | 8 | 0.003871 | 0.024913 | 44 |

| 1 | 2 | 3 | 3 | 4 | 0.003502 | 0.002819 | 44 |

| 2 | 2 | 4 | 3 | 5 | 0.003602 | 0.005649 | 44 |

| 3 | 12 | 6 | 19 | 9 | 0.020530 | 0.081323 | 44 |

| 4 | 13 | 5 | 21 | 9 | 0.022862 | 0.079074 | 44 |

Save the DataFrame

To CSV (Comma Separated Values)


To Excel
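A minimal sketch of both exports, using a two-row stand-in for the DataFrame. The file names are hypothetical, and the files are written to a temporary folder so the example leaves nothing behind.

```python
import tempfile
from pathlib import Path

import pandas as pd

# Two rows standing in for the full table.
df = pd.DataFrame({"PageRank": [0.003871, 0.003502], "Block": [44, 44]})

with tempfile.TemporaryDirectory() as folder:
    csv_path = Path(folder) / "node_stats.csv"   # hypothetical file name
    df.to_csv(csv_path, index_label="node")
    restored = pd.read_csv(csv_path, index_col="node")

    # Excel export follows the same pattern, but needs an engine such as
    # openpyxl to be installed:
    # df.to_excel(Path(folder) / "node_stats.xlsx")

print(restored)
```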


PyTorch Geometric (PyG)

PyG is a library built upon PyTorch to easily write and train Graph Neural Networks (GNNs) for a wide range of applications related to structured data.

Library for Deep Learning on graphs

It provides a large collection of GNN and pooling layers

New layers can be created easily

It offers:

- Support for Heterogeneous and Temporal graphs

- Mini-batch loaders

- Multi-GPU support

- DataPipe support

- Distributed graph learning via Quiver

- A large number of common benchmark datasets

- The GraphGym experiment manager

PyTorch Geometric documentation

Introduction by example

Each network is described by an instance of torch_geometric.data.Data, which includes:

- data.x: node feature matrix, with shape [num_nodes, num_node_features]

- data.edge_index: edge list in COO format, with shape [2, num_edges] and type torch.long

- data.edge_attr: edge feature matrix, with shape [num_edges, num_edge_features]

- data.y: target to train against (may have arbitrary shape)


Let’s build our Data object

Node features

We do not have any node features

We can use a constant for each node, e.g., $1$

torch.Size([70, 1])

tensor([[1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1.,

1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1.,

1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1.,

1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1.]])

Connectivity matrix and edge attributes

edge_index: torch.Size([2, 366])

edge_attr: torch.Size([366])

First ten edges:

edge_index:

tensor([[ 0, 0, 0, 0, 0, 0, 1, 1, 1, 2],

[ 1, 2, 3, 4, 5, 6, 18, 3, 4, 0]])

edge_attr:

tensor([1., 2., 2., 1., 1., 1., 1., 1., 2., 1.])

Create the Data instance


Data(x=[70, 1], edge_index=[2, 366], edge_attr=[366])

Create the train and test set

For link prediction, we need positive (existent) and negative (non-existent) edges

We can use the RandomLinkSplit class, which performs the negative sampling for us

Data(x=[70, 1], edge_index=[2, 235], edge_attr=[235], edge_label=[116], edge_label_index=[2, 116])


Data(x=[70, 1], edge_index=[2, 293], edge_attr=[293], edge_label=[146], edge_label_index=[2, 146])

Create the model

Model architecture:

- 2x GINE convolutional layers

- 1x Multi-Layer Perceptron (MLP)

The GINE layers will compute the node embedding

We can build the edge embedding by, e.g., concatenating the source and target nodes’ embeddings

The MLP will take the edge embeddings and return a probability for each edge

Let’s create the model instance


GINEModel(

(conv1): GINEConv(nn=MLP(1, 20, 20))

(conv2): GINEConv(nn=MLP(20, 20, 20))

(edge_regression): MLP(40, 20, 1)

)

Create the optimizer and loss instances

Define the train function and train the model

Define the test function

Training and testing

'Epoch: 2500, Loss: 0.0881'

'Epoch: 3000, Loss: 0.1207'

'Epoch: 3500, Loss: 0.0798'

'Epoch: 4000, Loss: 0.0769'

100% | 2001/2001 [00:08<00:00, 241.67it/s]

Evaluate the model performance

The torchmetrics package provides many performance metrics for various tasks

It is inspired by scikit-learn’s metrics subpackage


'Accuracy'

0.6095890402793884

'AUROC'

0.6100582480430603

Related

- wsGAT: Weighted and Signed Graph Attention Networks for Link Prediction

- mGNN: Generalizing the Graph Neural Networks to the Multilayer Case

- Machine learning dismantling and early-warning signals of disintegration in complex systems

- Insights into countries’ exposure and vulnerability to food trade shocks from network-based simulations

- (Unintended) Consequences of export restrictions on medical goods during the Covid-19 pandemic